“Collaborative Notes” are X’s human-AI hybrid fact-checks

Community Notes has been the unexpected success story of the Elon Musk era at X, a rare example of a feature that actually seems to reduce misinformation rather than amplify it. It’s the platform’s scrappy, crowdsourced answer to the fact-checking problem, and against all odds, it kind of works.

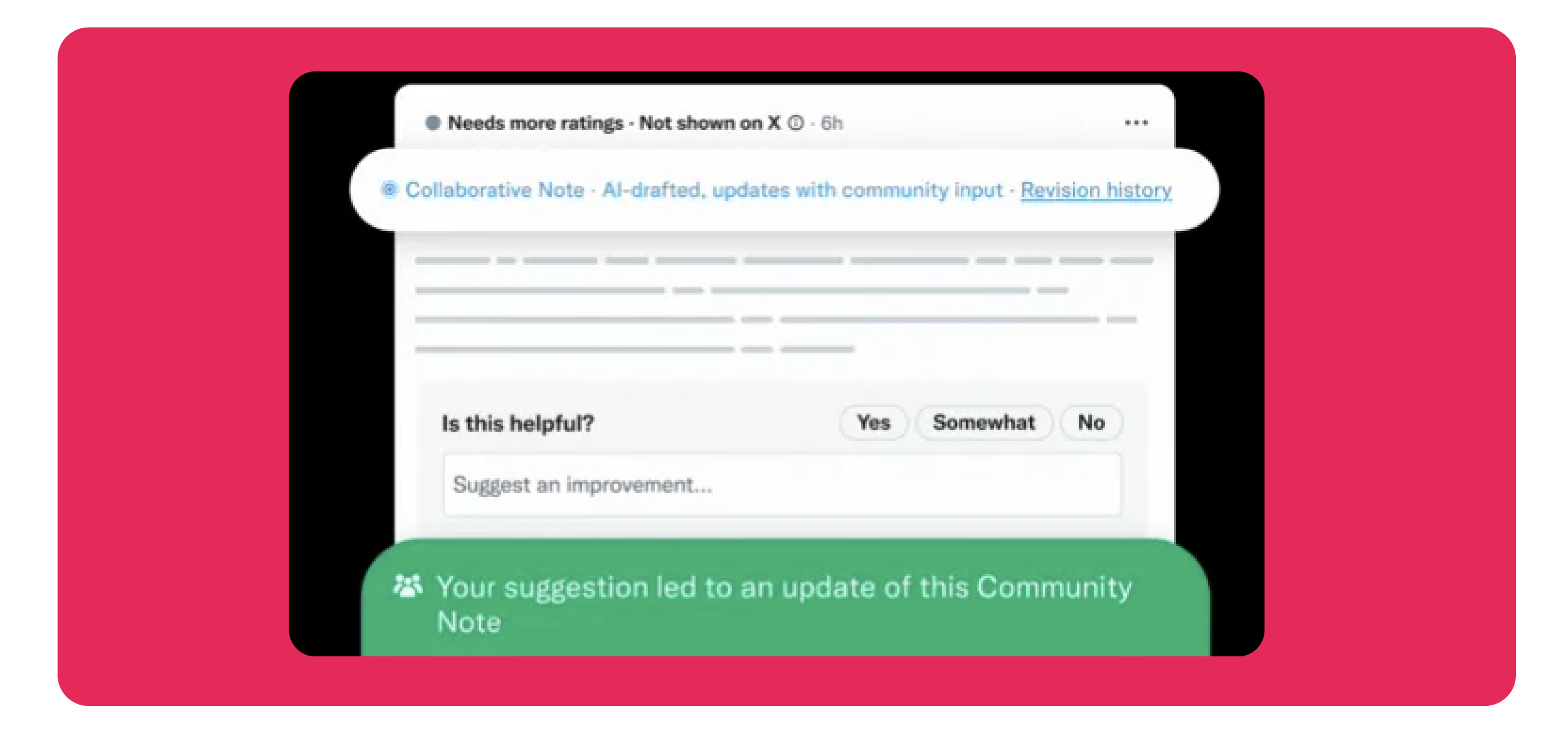

Now X is adding AI to the mix with “Collaborative Notes,” which lets artificial intelligence draft fact-checks that human contributors can then refine through ratings and suggestions.

The fundamental challenge with Community Notes has always been timing. Misinformation spreads at the speed of outrage. By the time a note appears on a viral post, the damage is often already done. Thousands of people have already seen, believed, and reshared the false claim.

AI-generated notes promise to help solve this by drafting fact-checks almost instantly when requested. Human contributors can then rate and refine these AI-generated drafts in real time, theoretically creating a system that’s both fast and accurate. The AI does the heavy lifting of gathering source material quickly, while humans provide the judgment and nuance that algorithms still can’t reliably deliver.

However, there’s a Grok-shaped elephant in the room. X will be using Grok, its in-house AI, to generate these notes. This means the AI helping fact-check posts on X has already demonstrated a willingness to spread the exact kind of misinformation the Community Notes system was designed to combat.

Despite the obvious Grok problem, the collaborative element here matters. The AI isn’t acting as the final authority; it’s drafting notes that humans then refine. If the system works as designed, bad AI outputs get corrected by human contributors before they’re shown widely.

And importantly, AI-generated notes are only created when requested, not automatically appended to every post. That means the system isn’t flooding X with algorithmic fact-checks.
